Organisation involved:

Barcelona Supercomputing Center-Centro Nacional de Supercomputación (BSC-CNS) is the national supercomputing centre in Spain. BSC specialises in high-performance computing (HPC) and manages MareNostrum V, one of the most powerful supercomputers in Europe. BSC serves the international scientific community and industrial users that require HPC resources. Its multidisciplinary research team and computational facilities, including MareNostrum, make BSC an international centre of excellence in e-Science.

Codes involved:

Alya: https://services.excellerat.eu/en/services/application-software/alya/

Sod2D: https://services.excellerat.eu/en/services/application-software/sod2d/

Technical / scientific challenge:

Handling complex aircraft geometries and discretizing the computational domain with high-order meshes poses significant challenges, particularly when simulations must be both accurate and energy-efficient. High-fidelity turbulence modeling, such as wall-modeled large eddy simulation (WMLES), requires massive computational resources and optimized numerical methods. In addition, achieving good performance from a Fortran-based CFD solver on multiple GPUs is non-trivial, because GPUs differ from CPUs in their memory hierarchy and parallel execution model.

Beyond simulation performance, pre- and post-processing large-scale datasets, potentially reaching billions of degrees of freedom (DoF), demands efficient I/O management and visualization tools. Traditional CPU-based methods suffer from long processing times and high energy consumption, which makes GPU acceleration central to this work and to its future sustainability.
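To give a sense of scale for the I/O problem, the sketch below estimates the size of one solution snapshot and the time to write it. It assumes one double-precision value (8 bytes) per DoF per stored field; the number of stored fields and the aggregate file-system bandwidth are hypothetical inputs, not figures from this project, and `snapshot_bytes` and `write_seconds` are illustrative names.

```c
#include <assert.h>
#include <stdint.h>

/* Bytes for one solution snapshot: one value per DoF per stored field.
 * Assumes every stored field has the same DoF count. */
uint64_t snapshot_bytes(uint64_t dofs, uint32_t nfields,
                        uint32_t bytes_per_value)
{
    return dofs * nfields * bytes_per_value;
}

/* Time to write `bytes` at an aggregate bandwidth in bytes per second.
 * The bandwidth is a hypothetical input, not a measured figure. */
double write_seconds(uint64_t bytes, double bandwidth_bytes_per_s)
{
    assert(bandwidth_bytes_per_s > 0.0);
    return (double)bytes / bandwidth_bytes_per_s;
}
```

For the 4.9-billion-DoF case in Figure 2, a single double-precision field is already 39.2 GB; if five fields were stored (an assumption), `snapshot_bytes(4900000000ULL, 5, 8)` gives 196 GB per snapshot, which would take about 2 s even at a hypothetical 100 GB/s aggregate bandwidth.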

Solution:

To overcome these challenges, a hybrid approach combining commercial software and in-house tools was used to generate high-order meshes of optimized quality. The Fortran-based solver, which uses the spectral element method (SEM), was parallelized for GPU architectures with OpenACC directives.
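The sketch below illustrates what directive-based offloading of an SEM operator can look like. It is a simplified 1D example in C, not code from Alya or Sod2D: in Fortran the same directive is spelled `!$acc parallel loop`, and a 3D hexahedral solver applies this kind of element-local differentiation along each tensor direction. The function name `sem_derivative`, the choice of polynomial order 2, and the data layout are assumptions for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Polynomial order p = 2: three Gauss-Lobatto-Legendre (GLL) nodes per
 * element, at xi = -1, 0, +1 on the reference element [-1, 1]. */
#define NP 3

/* Hypothetical kernel: du/dx at every node of a 1D mesh of equal-width
 * elements. Arrays are stored element by element: u[e * NP + i]. */
void sem_derivative(size_t nelem, double h,
                    const double *restrict u, double *restrict du)
{
    assert(h > 0.0);
    /* Lagrange differentiation matrix: D[i][j] = dl_j/dxi at node xi_i. */
    const double D[NP][NP] = {
        {-1.5,  2.0, -0.5},
        {-0.5,  0.0,  0.5},
        { 0.5, -2.0,  1.5},
    };
    const double dxi_dx = 2.0 / h; /* affine map from [-1, 1] to width h */
    const size_t n = nelem * NP;

    /* Every (element, node) pair is independent, so the two outer loops
     * are collapsed into one parallel iteration space on the GPU. Built
     * without an OpenACC flag, the pragma is ignored and the loop runs
     * serially on the CPU. */
    #pragma acc parallel loop collapse(2) copyin(u[0:n], D) copyout(du[0:n])
    for (size_t e = 0; e < nelem; ++e) {
        for (size_t i = 0; i < NP; ++i) {
            double s = 0.0;
            for (size_t j = 0; j < NP; ++j)
                s += D[i][j] * u[e * NP + j];
            du[e * NP + i] = s * dxi_dx;
        }
    }
}
```

Because a degree-2 polynomial is represented exactly with three GLL nodes, applying this kernel to u = x² returns 2x at every node, which makes it easy to check the offloaded version against the serial build.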

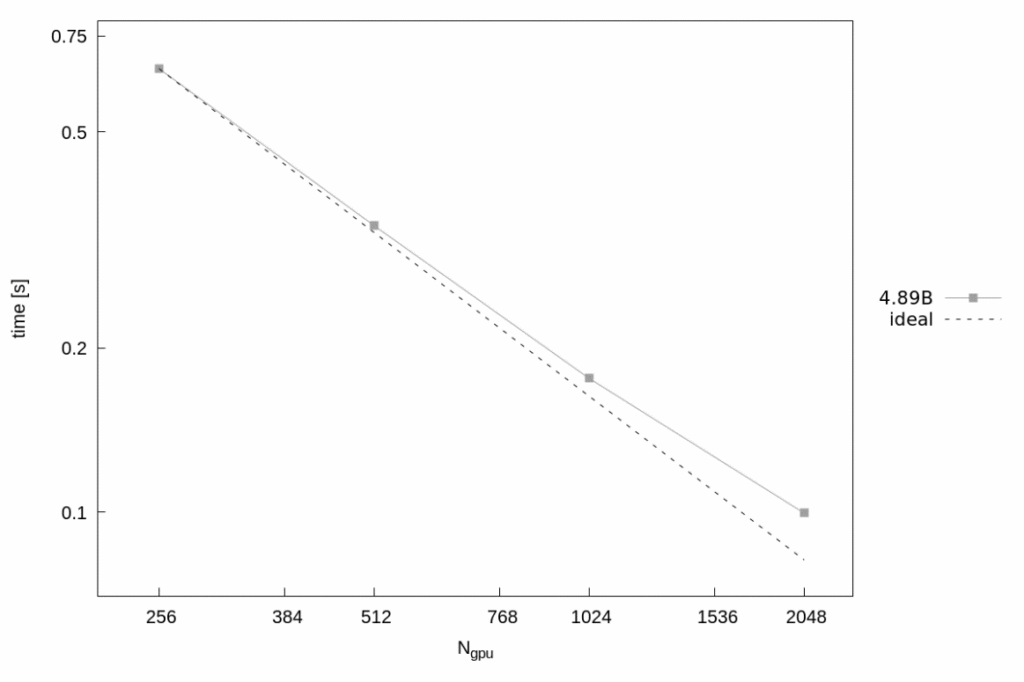

Furthermore, a key step was building the solver with NVIDIA’s HPC compilers, which enabled GPU acceleration of the computationally intensive routines. This yielded a substantial speedup of the flow simulations while maintaining numerical stability and accuracy. In addition, domain decomposition was used to distribute the workload across up to 2048 GPUs, achieving near-linear scaling for the large-scale aircraft simulation, as seen in Figure 2.
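Two quantities underlie the scaling claim: how evenly the elements are split across GPUs, and how close the measured speedup is to ideal. The sketch below shows a simple contiguous block partition (one rank per GPU assumed) and the standard strong-scaling efficiency relative to a reference run. It is not the partitioner used in this work; production codes often use graph or space-filling-curve partitioners that also minimize the interface shared between GPUs. The function names and the timings in the usage note are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Contiguous block partition of nelem elements over nranks ranks.
 * The first (nelem % nranks) ranks get one extra element, so local
 * sizes differ by at most one. */
void block_range(uint64_t nelem, uint32_t nranks, uint32_t rank,
                 uint64_t *first, uint64_t *count)
{
    assert(nranks > 0 && rank < nranks);
    uint64_t base = nelem / nranks;
    uint64_t rem = nelem % nranks;
    *count = base + (rank < rem ? 1 : 0);
    *first = rank * base + (rank < rem ? rank : rem);
}

/* Strong-scaling efficiency relative to a reference run:
 * E = (t_ref * n_ref) / (t_n * n); E = 1 means ideal linear scaling. */
double strong_scaling_efficiency(double t_ref, uint32_t n_ref,
                                 double t_n, uint32_t n)
{
    assert(t_n > 0.0 && n > 0);
    return (t_ref * n_ref) / (t_n * n);
}
```

For example, with made-up timings, going from 8.0 s per iteration on 256 GPUs to 1.0 s on 2048 GPUs gives E = 1.0 (ideal), while 1.25 s on 2048 GPUs would give E = 0.8.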

For pre- and post-processing, optimized parallel I/O strategies were implemented to handle large datasets efficiently. High-performance visualization tools were integrated to facilitate real-time data analysis, reducing the bottlenecks traditionally associated with CFD post-processing.
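A common building block of parallel I/O is letting every rank write its own contiguous slab of one shared file, which requires each rank to know where its slab starts. The sketch below computes those start offsets as an exclusive prefix sum of the per-rank counts. It is a serial illustration under that assumption, not the project's I/O code; in an MPI code each rank would typically obtain its own offset with a collective such as `MPI_Exscan` and then issue an MPI-IO or parallel HDF5 write. The name `slab_offsets` is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Exclusive prefix sum: offsets[r] is the index in the shared global
 * array where rank r's slab begins. Returns the global total, i.e. the
 * size of the shared dataset. */
uint64_t slab_offsets(const uint64_t *local_counts, size_t nranks,
                      uint64_t *offsets)
{
    assert(local_counts != NULL && offsets != NULL);
    uint64_t running = 0;
    for (size_t r = 0; r < nranks; ++r) {
        offsets[r] = running;      /* start of rank r's slab */
        running += local_counts[r];
    }
    return running;
}
```

Because the slabs do not overlap, all ranks can write concurrently instead of funnelling data through a single process, which is the usual bottleneck in serial post-processing.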

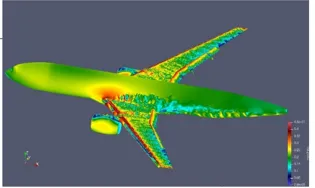

Figure 1: Computed flow field

Impact:

Using GPUs to accelerate large-scale CFD simulations has substantially reduced time-to-solution and significantly improved energy efficiency compared with CPU-based approaches, offering a more sustainable and cost-effective pathway for aircraft design and aerodynamics research. The simulations ran approximately 20× faster on a single Accelerated Partition (ACC) node of MareNostrum V than on a General Purpose Partition (GPP) node. Furthermore, the study showed that the GPU-based solver consumed 10 to 12 times less energy than its CPU-based counterpart to complete the same simulation under otherwise identical conditions. The improved solver capabilities enabled high-fidelity simulations of aircraft aerodynamics, including accurate prediction of turbulent flow structures (see Figure 1). This allows more accurate estimates of drag and lift and better assessment of noise-reduction strategies, directly benefiting the aerospace industry by helping to optimize aircraft performance and fuel efficiency.
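The speedup and energy figures can be related through energy = average power × time. Assuming both ratios come from the same pair of runs (one ACC node versus one GPP node), they imply how much more average power the GPU node draws:

```latex
\begin{aligned}
E &= \bar{P}\, t, \qquad
S = \frac{t_{\mathrm{GPP}}}{t_{\mathrm{ACC}}}, \qquad
R_E = \frac{E_{\mathrm{GPP}}}{E_{\mathrm{ACC}}}
    = \frac{\bar{P}_{\mathrm{GPP}}}{\bar{P}_{\mathrm{ACC}}}\, S \\
\Rightarrow\quad
\frac{\bar{P}_{\mathrm{ACC}}}{\bar{P}_{\mathrm{GPP}}} &= \frac{S}{R_E}
\approx \frac{20}{12} \text{ to } \frac{20}{10}
\approx 1.7 \text{ to } 2.0
\end{aligned}
```

Under that assumption, the ACC node would draw roughly 1.7 to 2 times the average power of the GPP node, but because it finishes about 20 times sooner, its energy per simulation is 10 to 12 times lower.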

Additionally, the research community benefits from this work through open access to optimized HPC workflows, enabling more institutions to use exascale technologies for fluid dynamics simulations. The knowledge gained from this project is applicable not only to aeronautics but also to wind energy, climate modelling, and automotive aerodynamics.

Figure 2: Solution time per iteration for the flow around an aircraft with 4.9 billion degrees of freedom, using up to 2048 GPUs