Overview

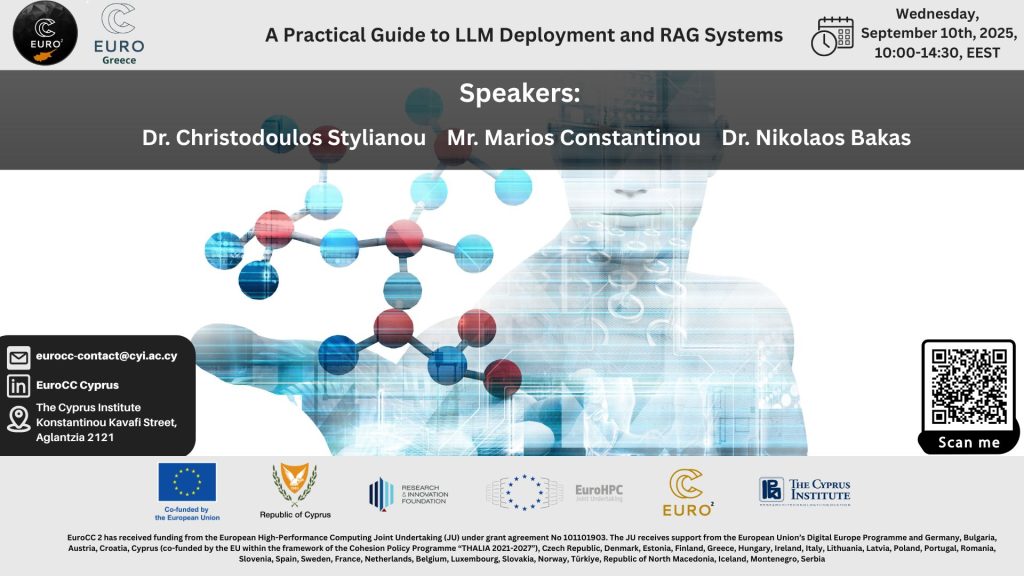

NCC Cyprus and NCC Greece invite you to a 4.5-hour hybrid training event on deploying and working with Large Language Models (LLMs) in self-hosted and high-performance computing environments. The program will combine lectures and hands-on sessions, focusing on practical workflows for local deployment, inference, and retrieval-augmented generation (RAG). Participants will learn how to use the Hugging Face ecosystem for transformers and embeddings, experiment with inference and semantic similarity techniques, and build retrieval-enabled applications. The hands-on part will include deploying models on Cyclone, the National HPC infrastructure, using tools such as vLLM and Haystack to set up model-serving pipelines and integrate retrieval with generation. The event will take place at the Andreas Mouskos Seminar Room of the Cyprus Institute and online.

Venue

This is a hybrid training event. You are welcome to join us in person at the Andreas Mouskos Seminar Room, The Cyprus Institute.

Otherwise, please connect to our live stream on Zoom (password: VsSCz1).

Registration

Registration for this event is open until Monday, September 8th, 2025. The registration form is available here.

Requirements

Attendees should bring their own laptop (with administrative privileges) to follow the hands-on sessions. Please make sure the following software is installed: a) a web browser and a PDF viewer, and b) a command-line interface or other client that supports SSH.

Attendees should make sure they can access the machine before the event. Instructions on how to generate the SSH key can be found in the Accessing and Navigating Cyclone tutorial. We recommend generating the SSH key with Git Bash for Windows (Section 2.5.2, Option 2, of the tutorial).

Agenda

09:30-10:00: Hands-on Setup (Optional)

Please use this session to ensure you can access the HPC system.

10:00-10:45: Deploying Large Language Models Locally

This presentation covers the process of deploying large language models on local machines and high-performance computing systems. It focuses on the tools and workflows needed to run models efficiently without relying on cloud infrastructure.

The talk will include practical tips for setting up environments, managing resources, and avoiding common issues during deployment. It will also introduce retrieval-augmented generation (RAG) systems and explain how they can be used to improve model responses with local or custom data. The goal is to provide a clear, practical overview for anyone interested in working with LLMs in a self-hosted environment.

10:45-12:00: A Practical Overview of Transformers, Embeddings and RAG Systems

In this session, we will show how to set up and use Large Language Models (LLMs) for various tasks with the Hugging Face Transformers library. We will cover techniques for inference and text generation, including streaming outputs, and use embeddings to understand and visualize semantic relationships between words and sentences through cosine similarity. A key focus of the session is the basics of Retrieval-Augmented Generation (RAG): we will demonstrate how to build a system that retrieves relevant information from a text corpus to answer user questions. By the end of this session, you will have hands-on experience with powerful LLM tools and an understanding of how to build custom LLM applications that combine language generation with information retrieval.
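As a rough illustration of the cosine-similarity and retrieval ideas above, the sketch below scores a few sentences against a query and returns the closest matches. To stay self-contained, it assumes simple bag-of-words count vectors as a stand-in for learned embeddings. In the session, embeddings would instead come from a Hugging Face model. The helper names `embed`, `cosine_similarity` and `retrieve`, and the sample corpus, are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lower-cased word counts.
    Assumption: a real pipeline would use a learned embedding model instead."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """cos(a, b) = (a . b) / (|a| * |b|); returns 0.0 if either vector is empty."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, corpus: list[str], k: int = 1) -> list[tuple[float, str]]:
    """Rank corpus passages by cosine similarity to the query and return the top k."""
    q = embed(query)
    scored = [(cosine_similarity(q, embed(doc)), doc) for doc in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Hypothetical corpus, used only for illustration.
corpus = [
    "Cyclone is an HPC system used for the hands-on session.",
    "vLLM serves large language models efficiently.",
    "Haystack builds retrieval-augmented generation pipelines.",
]

if __name__ == "__main__":
    for score, passage in retrieve("Which tool serves language models?", corpus, k=2):
        print(f"{score:.3f}  {passage}")
```

Swapping `embed` for a real embedding model leaves `cosine_similarity` and `retrieve` unchanged, which is the retrieval step a RAG system builds on.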

12:00-12:30: Break

12:30-14:30: Hands-On: Model Deployment through vLLM, Communication and Creation of RAG Pipelines

In this hands-on session, participants will deploy large language models on Cyclone, the National High Performance Computing (HPC) infrastructure, using tools like vLLM for efficient inference and Haystack for building retrieval-augmented generation (RAG) pipelines. The session will guide attendees through the end-to-end process of setting up model environments, running local inference, and integrating retrieval components to create responsive, data-aware applications. By working directly on HPC resources, participants will gain practical experience in managing compute workloads, handling model-serving pipelines, and building systems that combine LLM outputs with relevant external knowledge.
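The sketch below shows one way retrieval and generation could be wired together by hand. Retrieved passages are placed into a prompt and sent to a model served by vLLM through its OpenAI-compatible chat-completions endpoint. It assumes a server started with something like `vllm serve <model>` and listening on the default `http://localhost:8000`. The URL, the model name `my-model` and the helper functions are hypothetical placeholders. The session itself builds these pipelines with Haystack rather than raw HTTP calls.

```python
import json
import urllib.request

# Assumption: vLLM's OpenAI-compatible server running on its default port.
VLLM_URL = "http://localhost:8000/v1/chat/completions"
MODEL_NAME = "my-model"  # hypothetical; must match the model the server was started with


def build_rag_messages(question: str, passages: list[str]) -> list[dict]:
    """Combine numbered retrieved passages and the question into one user message."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    prompt = (
        "Answer the question using only the context below. "
        "If the answer is not in the context, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
    return [{"role": "user", "content": prompt}]


def build_request_body(question: str, passages: list[str], max_tokens: int = 256) -> dict:
    """Request body in the OpenAI chat-completions format."""
    return {
        "model": MODEL_NAME,
        "messages": build_rag_messages(question, passages),
        "max_tokens": max_tokens,
        "temperature": 0.0,
    }


def ask(question: str, passages: list[str]) -> str:
    """POST the request to a running vLLM server and return the generated answer."""
    body = json.dumps(build_request_body(question, passages)).encode("utf-8")
    request = urllib.request.Request(
        VLLM_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]
```

With a server running, a call such as `ask("What does vLLM do?", ["vLLM serves large language models efficiently."])` would return the model's answer. The context goes into the user message rather than a system message because some chat templates do not accept a system role.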

Prerequisites

Attendees should be familiar with Python, and some previous experience with PyTorch is also expected. Hands-on exercises are part of the training and will be provided in Python.